WYSIWYF Display

A visual/haptic interface to virtual environments

(This project is no longer active)

Yasuyoshi Yokokohji, Ralph Hollis, and Takeo Kanade

Overview

Haptic interfaces have potential applications in training and simulation, where kinesthetic sensation plays an important role along with the usual visual input. The problem of combining the visual and haptic channels, however, has not been seriously considered. Some systems simply place a graphics display beside the haptic interface, resulting in a "feeling here but looking there" situation. Some skills, such as pick-and-place, can be regarded as visual-motor skills, where visual stimuli and kinesthetic stimuli are tightly coupled. If a simulation/training system does not provide the proper visual/haptic relationship, the training effort might not accurately reflect the real situation (no skill transfer), or even worse, the training might be counter to the real situation (negative skill transfer).

In our work, we are proposing a new concept of visual/haptic interfaces which we call a "WYSIWYF display." WYSIWYF means "What You See Is What You Feel". The proposed concept is a combination of vision-based object registration for the visual interface and encountered-type display for the haptic interface.

Visual interface

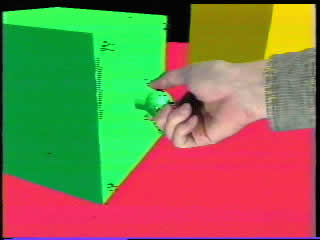

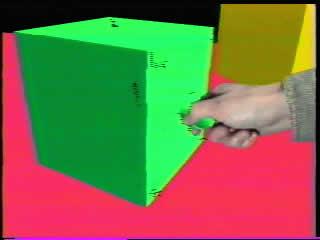

Currently we are using a hand-held camera/display for our visual interface. It consists of a color TFT liquid crystal display panel with a color CCD camera attached to the back of the panel. The CCD camera captures an image of the haptic device, which carries several markers. The marker locations are tracked in real time, and the relative pose between the camera and the haptic device is estimated from them. A graphical image of a world populated by virtual objects is then rendered based on the estimated pose. In parallel, the live video image of the user's hand is extracted using a chroma-keying technique (the haptic device and background are all painted blue), and the extracted hand image is blended with the virtual environment graphics. Finally, the blended image is displayed on the LCD panel.
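The extraction and blending step described above can be sketched in a few lines. This is only an illustrative sketch, not the system's actual implementation: the function names, the `blue_margin` threshold, and the simple "blue dominates red and green" test are all assumptions made for the example. Frames are represented as nested lists of RGB tuples to keep the code self-contained.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Frame = List[List[Pixel]]      # rows of pixels


def is_key_color(pixel: Pixel, blue_margin: int = 60) -> bool:
    """Hypothetical key test: a pixel counts as blue backdrop when its
    blue channel exceeds both red and green by more than blue_margin."""
    r, g, b = pixel
    return b - max(r, g) > blue_margin


def chroma_key_blend(video: Frame, rendered: Frame,
                     blue_margin: int = 60) -> Frame:
    """Keep non-blue video pixels (the user's hand) and replace blue
    pixels (device and background) with the rendered virtual scene."""
    if len(video) != len(rendered) or any(
            len(v_row) != len(g_row) for v_row, g_row in zip(video, rendered)):
        raise ValueError("video and rendered frames must have the same size")
    return [
        [g_px if is_key_color(v_px, blue_margin) else v_px
         for v_px, g_px in zip(v_row, g_row)]
        for v_row, g_row in zip(video, rendered)
    ]


# A 1x2 frame: one blue backdrop pixel and one skin-toned pixel.
video_frame = [[(10, 20, 200), (200, 150, 120)]]
virtual_frame = [[(0, 255, 0), (0, 255, 0)]]
blended = chroma_key_blend(video_frame, virtual_frame)
```

In this sketch the virtual scene only shows through where the camera sees blue, which is why the haptic device and background are painted in the key color.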

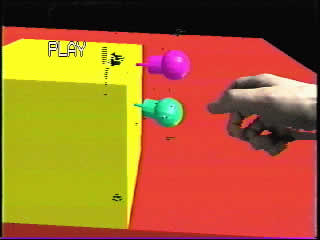

Original video image

Overlaid image demonstrating a correct visual/haptic registration

Final blended image

Since the virtual scene is rendered based on the current relative pose between camera and haptic device, the user can touch the actual haptic device exactly when his or her hand touches the virtual object in the display. Thus the user is unencumbered by the need to wear a data glove or other device. The user can see the virtual object and his or her hand at exactly the same location where the haptic device exists---in other words, "what you can see is what you can feel."

Haptic interface

We are introducing the encountered-type display concept for haptic interfaces. Unlike common techniques in use today, the user of this system need not hold the device all the time; instead, the user's hand "encounters" the device only when he or she touches an object in the virtual world (surface display mode). When the user touches the device, a force/torque sensor measures the force/torque exerted by the user. The resulting motion of the virtual object and its contact forces with other virtual objects are then calculated based on a rigid body model (admittance display mode). In our laboratory, a PUMA 560 robot arm is currently used as the haptic device.
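The admittance display mode described above maps a measured force to a motion of the virtual object, which the device then tracks. A minimal sketch of one control cycle follows. It assumes a single translational axis, a frictionless point mass, no contact with other objects, and semi-implicit Euler integration. The names `VirtualBody` and `admittance_step` are hypothetical, and the real system uses a full rigid body model with force/torque input.

```python
from dataclasses import dataclass


@dataclass
class VirtualBody:
    """Hypothetical one-axis stand-in for a virtual rigid object."""
    mass: float             # kg
    position: float         # m
    velocity: float = 0.0   # m/s


def admittance_step(body: VirtualBody, measured_force: float,
                    dt: float) -> float:
    """Advance the virtual body by one control period.

    measured_force is the force the user applies to the device (N),
    as read from the force sensor. The returned position would be sent
    to the robot as its next position command.
    """
    acceleration = measured_force / body.mass   # Newton's second law
    body.velocity += acceleration * dt          # update velocity first
    body.position += body.velocity * dt         # then position
    return body.position


cube = VirtualBody(mass=2.0, position=0.0)
first_command = admittance_step(cube, measured_force=4.0, dt=0.1)
# With no friction, the cube keeps moving after the user lets go.
second_command = admittance_step(cube, measured_force=0.0, dt=0.1)
```

The key design point is the direction of causality: force is the input and position is the output, which suits a stiff, position-controlled arm such as an industrial manipulator.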

demo movie of the encountered-type haptic display

Some examples

Cube

A simple frictionless virtual environment was built in which a 20 cm × 20 cm × 20 cm cube rests on top of a flat table.

Manipulation of a virtual cube

demo movie of the cube manipulation

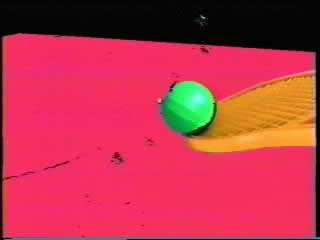

Virtual tennis

In this example, note that the ball is a virtual image but the racket is a real image. This example demonstrates that the user can interact with a virtual environment not only with his/her own hand but also with other real tools.

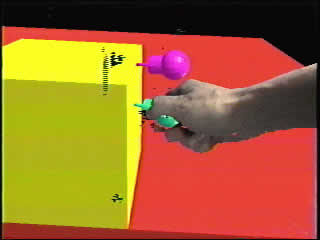

What you can see

What you are really doing

demo movie of the virtual tennis

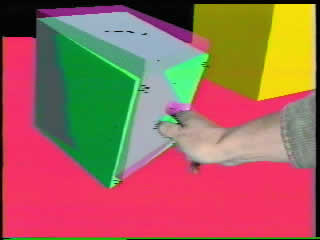

Skill training

One potential application of this system is the training of visuo-motor skills, such as medical operations. The next figure shows a simple example of training. The user tries to follow the pre-recorded motion of an expert, which is displayed as a transparent cube. A weak position servo can guide the user toward the reference motion. Unlike just watching a video, the trainee can feel the reaction forces from the virtual environment while following the reference motion.

An example of skill training
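One way to picture the weak position servo mentioned above is as a soft spring-damper that pulls toward the expert's recorded motion, with the force capped so the trainee can always override it. The sketch below is an assumption made for illustration, not the controller used in the system. The function name, the gains `kp` and `kd`, and the limit `f_max` are all hypothetical.

```python
def guidance_force(x: float, x_ref: float,
                   v: float = 0.0, v_ref: float = 0.0,
                   kp: float = 20.0, kd: float = 2.0,
                   f_max: float = 5.0) -> float:
    """Return a guiding force (N) along one axis.

    x, v         : current position (m) and velocity (m/s) of the object
    x_ref, v_ref : pre-recorded expert position and velocity
    kp, kd       : low (hypothetical) gains, so the servo stays "weak"
    f_max        : saturation limit that keeps the guidance overridable
    """
    force = kp * (x_ref - x) + kd * (v_ref - v)
    return max(-f_max, min(f_max, force))


small_error = guidance_force(x=0.0, x_ref=0.1)   # gentle pull toward reference
large_error = guidance_force(x=0.0, x_ref=1.0)   # saturates at f_max
on_track = guidance_force(x=0.1, x_ref=0.1)      # no correction needed
```

In an admittance-type display, a force like this could be added to the measured hand force before the virtual object's motion is computed, so the trainee feels both the guidance and the reaction forces from the environment.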

Handling multiple tools

As discussed, our WYSIWYF display adopts the encountered-type haptic display. In the previous examples, the user could manipulate only one virtual object (a cube or a ball). In such a case, the haptic device can simply stay at the location of the virtual object. If there is more than one virtual object, however, the device has to change its location according to the user's choice.

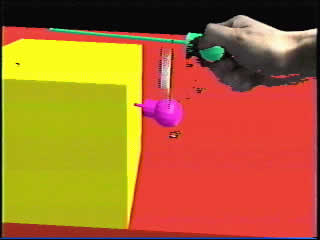

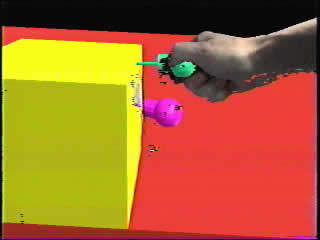

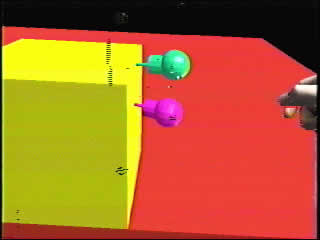

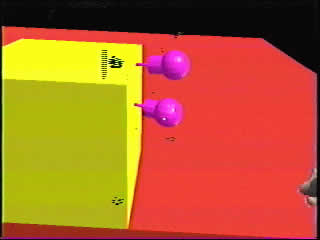

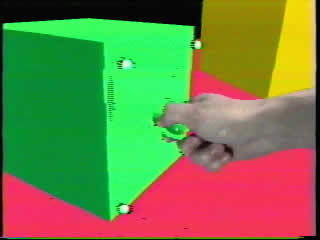

The next figures show an example of handling multiple tools: a sequence of changing tools. Two tools are stuck in a piece of "virtual cheese". When the user decides to change tools, the haptic device moves so that he/she can encounter the next tool. In this example, the user manually selects one of the tools with a toggle switch. When the selected tool is ready to be encountered, its color changes from red to green. Ideally, the system should detect the user's selection automatically by tracking the motion of his/her hand.

A sequence of changing the tools

A Fast Tracker

The first prototype system had a bottleneck in the vision-based tracking component, and its performance was not satisfactory (slow frame rate and large latency). Therefore, all demo movies above were taken in camera-fixed mode, where the vision-based tracking is used only at the initialization stage to obtain the correct registration and is disabled at run time, with the camera/display system kept stationary.

To remove this bottleneck, we have implemented a video-rate tracker, FUJITSU Tracking Vision (TRV), which is capable of tracking more than 100 markers at video rate (30 Hz). The estimated frame rate is 20 Hz. Although the system performance has improved considerably, there is still a noticeable latency (about two or three frames). Further improvements will be necessary.

Tracking mode using a fast tracker

demo movie of tracking mode with a fast tracker

Future work and potential application

To display more sensitive and accurate haptic information, we are planning to attach our Magic Wrist to the tip of the PUMA, making a macro/micro configuration. Also, the hand-held camera/display would be replaced by a head-mounted unit in the future.

Potential applications include teleoperation systems with large time delays, and rehearsal and training/simulation for tasks requiring visual-motor skills, such as medical operations and space station maintenance/repair.

Publications

- "What you can see is what you can feel. -Development of a visual/haptic interface to virtual environment-," Y. Yokokohji, R. L. Hollis, and T. Kanade, IEEE Virtual Reality Annual International Symposium, March 30 - April 3, 1996, pp. 46-53.

- "Vision-based Visual/Haptic Registration for WYSIWYF Display," Y. Yokokohji, R. L. Hollis, and T. Kanade, International Conference on Intelligent Robots and Systems, IROS '96, Osaka, Japan, November 4-8, 1996, pp. 1386-1393.

- "Toward Machine Mediated Training of Motor Skills -Skill Transfer from Human to Human via Virtual Environment-," Y. Yokokohji, R. L. Hollis, T. Kanade, K. Henmi, and T. Yoshikawa, 5th IEEE International Workshop on Robot and Human Communication, RO-MAN '96, Tsukuba, Japan, November 11-14, 1996, pp. 32-37.